Deploying Kubernetes using Terraform, Proxmox, Ansible, and K3s

I’ve been meaning to try Kubernetes for a long time, but I kept finding excuses. It’s been at the back of my mind marinating for yeaaars.

One thing I absolutely did not want to do was spend hours clicking through admin panels just to get something working. I recently learned there’s a term for this: ClickOps. I developed a strong aversion to this way of doing things when I transitioned to Cloud Engineering. Clicking things can get old fast.

Terraform + Proxmox

There is barely any overlap between what I do at my full-time job and my homelab projects. We use Terraform at work to manage AWS resources. At home, I use Proxmox for all my servers.

I knew there was a Proxmox provider for Terraform, but I hadn’t seriously considered it, given how vastly different its “resources” looked compared to the AWS provider’s.

I gave it a shot, and it worked beautifully. There’s something exciting about finally bridging the gap between the tools I use at work and the ones I use in my homelab.

This solved my VM provisioning problem.

This is the only Proxmox provider I recommend: https://github.com/bpg/terraform-provider-proxmox. I ran into issues with all the others.

Ansible for configuration management

Next, I needed a way to handle configuration management. While Terraform can invoke Bash scripts through provisioners, it doesn’t track what those scripts change, so it’s a poor fit for ongoing configuration. This led me to another tool I had been meaning to try: Ansible.

Prior to this project, I had only read about it in passing, with no actual experience at all. This was a good excuse to try it out.

Wrapping up

My primary goal is reproducibility. I want the config I write now to be reusable later, so I can destroy and rebuild the cluster whenever I want to experiment.

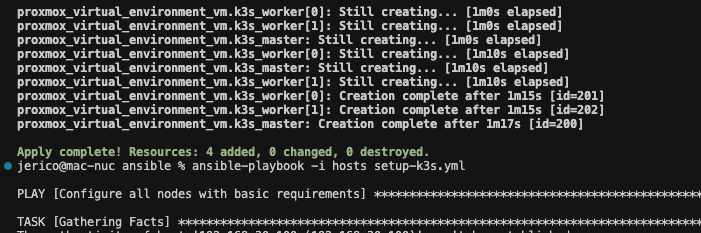

Here’s what the process looks like for creating a new Kubernetes cluster once Proxmox is up and running:

    terraform init
    terraform apply
    cd ansible
    ansible-playbook -i hosts setup-k3s.yml
    export KUBECONFIG=./k3s.yaml
    kubectl get nodes

That’s it! The setup is fully reproducible, and I now have a fresh Kubernetes cluster.
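If typing those commands gets old too, they could be wrapped in a small script. This is only a sketch, not something from the repo: the names `run` and `build_cluster`, the `DRY_RUN` variable, and the `--run` flag are all made up for illustration. It assumes it is started from the directory that holds the Terraform config, with the `ansible` folder next to it, exactly as in the steps above.

```shell
#!/bin/sh
# Sketch of a wrapper around the manual steps above.
# run, build_cluster, DRY_RUN and --run are hypothetical names.
set -eu  # stop at the first failing step or unset variable

# Run a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Same steps, same order as the manual process.
build_cluster() {
  run terraform init
  run terraform apply                       # still asks for confirmation
  run cd ansible
  run ansible-playbook -i hosts setup-k3s.yml
  run export KUBECONFIG=./k3s.yaml          # only set inside this script
  run kubectl get nodes
}

# Only build when asked explicitly, so sourcing the file is harmless.
if [ "${1:-}" = "--run" ]; then
  build_cluster
fi
```

Saved under a name of your choice, running it with `DRY_RUN=1` and `--run` prints the six steps without touching anything, and dropping `DRY_RUN` runs them for real. Because `KUBECONFIG` is exported inside the script's own process, it still has to be exported again in your interactive shell before using `kubectl` there.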

https://github.com/jerico/terraform-proxmox-k3s

Next step is actually running things on the cluster 😄